In this AWS re:Post Article, I describe how I test changes to the HCX provider for VMC on AWS.

Tag: VMware

A Go novice’s experience with Terraform providers

In this AWS re:Post Article, I explain how I added support for M1 Macs to an open source Terraform provider […]

Cloning a VM using pyVmomi in VMware Cloud on AWS

There are many ways to automate cloning a virtual machine, including PowerCLI and the pyVmomi library for Python. In this […]

Using PowerCLI to import and export VMs with VMware Cloud on AWS

PowerCLI is commonly used by vSphere admins to automate tasks. In this AWS re:Post Article, I demonstrate importing and exporting […]

Automating the account linking process in VMware Cloud on AWS

In this AWS re:Post Article, I explore automating a typically manual process – linking a VMware Cloud on AWS org […]

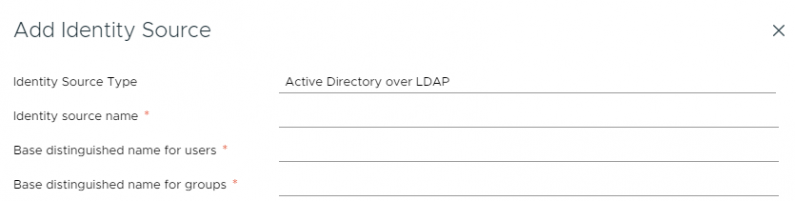

vCenter Roles with LDAP credentials in VMware Cloud on AWS

In this AWS re:Post Article, I demonstrate how to ensure your LDAP administrative users have the same permission level as […]

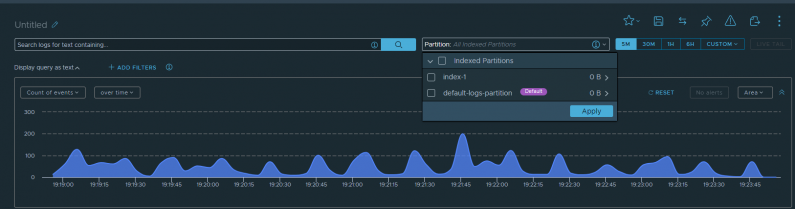

Log Forwarding and Retention with vRealize Log Insight Cloud

In this AWS re:Post Article, I cover a request that one of my customers had yesterday. They need to forward […]

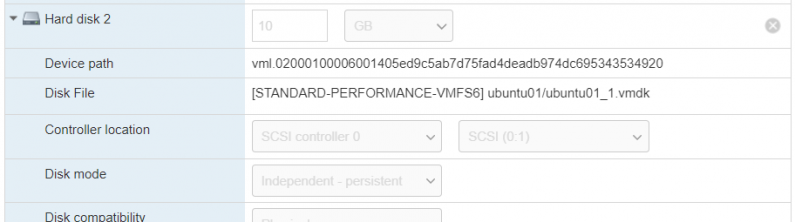

AWS Elastic Disaster Recovery with VMware Raw Device Mappings

In this re:Post Article, I demonstrate using AWS Elastic Disaster Recovery to fail over a filesystem backed by a physical […]

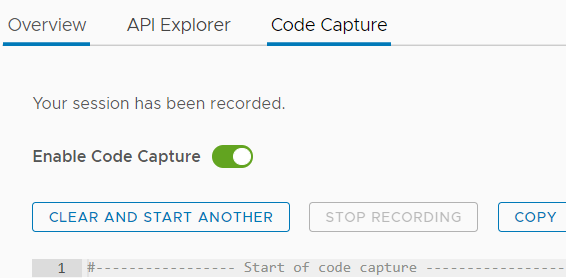

Using Code Capture to decipher VMware APIs

Now that I work at AWS, most of my content will be published on AWS-owned repositories. AWS re:Post is a […]

Invoke-GetVMGuestLocalFilesystem displays no data

A customer reported the following in the VMware {Code} Slack: "Hello! I’m trying to automate the increment of disk […]"