I had a customer report the following in VMware {Code} Slack: Hello! I’m trying to automate the increment of disk […]

Category: PowerCLI

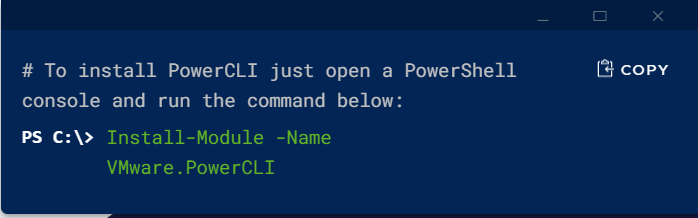

VMware PowerCLI – the PowerShell command-line interface for VMware.

Monitor Horizon logins via Slack webhook

Nico and I are working on a training idea we’re calling Start Coding: Today! The idea is that anybody can […]

Unable to install PowerCLI

I was in an older VDI template trying to add the PowerCLI module with the `Import-Module VMware.PowerCLI` command. I received […]

VMware Event Broker Appliance – Part VII – Deploy the Sample Host Maintenance Function (OpenFaaS)

In Part VI of this series, we showed how to sync a fork of our VEBA repository to the upstream […]

Moving VMs to a different vCenter

I had to move a number of clusters into a different VirtualCenter and I didn’t want to have to […]

Moving PVS VMs from e1000 to VMXNET3 network adapter

A client needed to remove the e1000 NIC from all VMs in a PVS pool and replace it with the […]

Mass update VM description field

I had a need to update description fields in many of my VMs and the vSphere client doesn’t exactly lend […]

vSphere Datastore Last Updated timestamp – Take 2

I referenced this in an earlier post, but we continue to have datastore alarm problems on hosts running 4.0U2 connected […]

Guest NICs disconnected after upgrade

We are upgrading our infrastructure to ESXi 4.1 and had an unexpected result in the Development cluster where multiple VMs were […]

PowerCLI proxy problems

Today, I couldn’t connect to my vCenter server using `Connect-VIServer`. It failed with “Could not connect using the requested protocol.” […]