In this AWS re:Post Article, I demonstrate using a new feature in the AWS Terraform provider. The lifecycle scope feature […]

Tag: VMC

A Go novice’s experience with Terraform providers

In this AWS re:Post Article, I explain how I added support for M1 Macs to an open source Terraform provider […]

Cloning a VM using pyVmomi in VMware Cloud on AWS

There are many ways to automate cloning a virtual machine, including PowerCLI and the pyVmomi library for Python. In this […]

Automating the account linking process in VMware Cloud on AWS

In this AWS re:Post Article, I explore automating a typically manual process – linking a VMware Cloud on AWS org […]

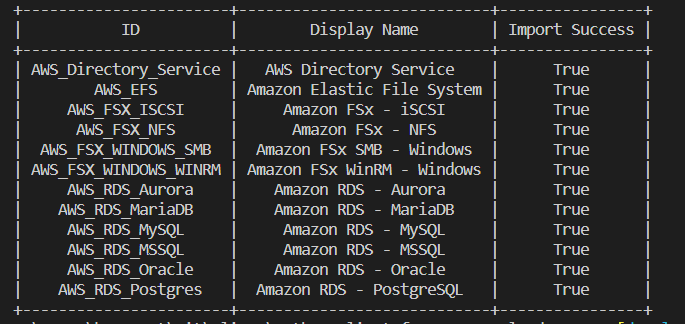

Custom service definitions in VMware Cloud on AWS

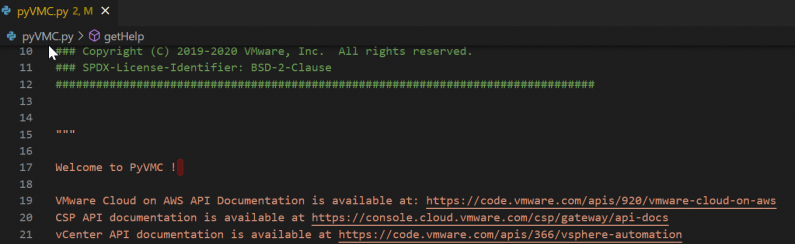

In this re:Post Article, I walk through a feature I contributed to the Python Client for VMware Cloud on AWS […]

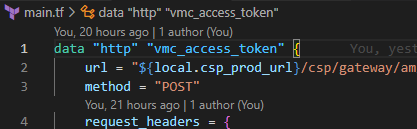

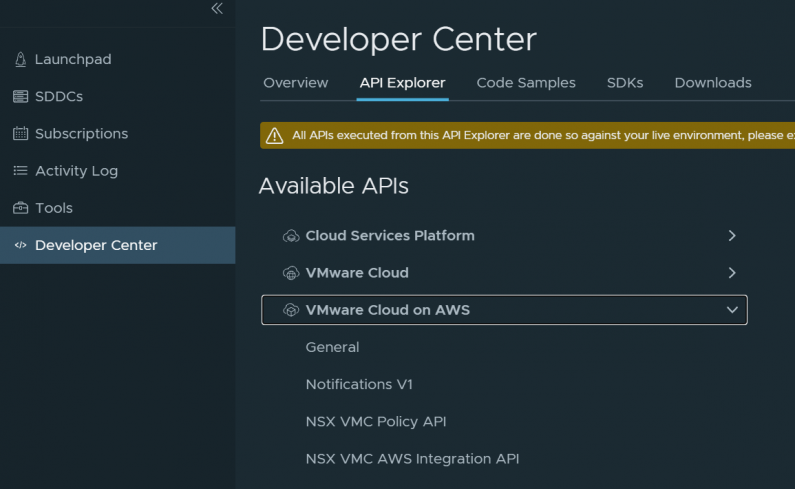

Invoking VMware Cloud on AWS REST API calls from Terraform

In this re:Post Article, I demonstrate invoking the VMC on AWS REST API from Terraform. There are often situations where […]

SDDC Import/Export for VMware Cloud on AWS – Part VIII – API Pagination

Building a Fling: One of the fun things about working for VMware is that anybody can create a Fling. If you […]

VMware Cloud on AWS Showback with vRealize Operations Manager

I had a customer ask me what options we have for chargeback/showback for VMs in VMware Cloud on AWS. vRealize […]

Python Client for VMC on AWS – Part II – More User Management

In Part I of this series, we examined some of the user management functions in v1.4 of PyVMC. In this […]

NSX Advanced Load Balancer – Part V – Application VIP Assignments

In Part I, we assigned the application VIPs a range out of the back half of the 10.47.165.0/24 subnet. This […]