Some VMs in my environment had virtual-mode RDMs on them, along with multiple nested snapshots. Some of the RDMs were subsequently extended at the storage array level, but the storage team didn’t realize there was an active snapshot on the virtual-mode RDMs. This resulted in immediate shutdown of the VMs and a vSphere client error “The parent virtual disk has been modified since the child was created” when attempting to power them back on.

I had done a little bit of work dealing with broken snapshot chains before, but the change in RDM size was outside of my wheelhouse, so we logged a call with VMware support. I learned some very handy debugging techniques from them and thought I’d share that information here. I went back into our test environment and recreated the situation that caused the problem.

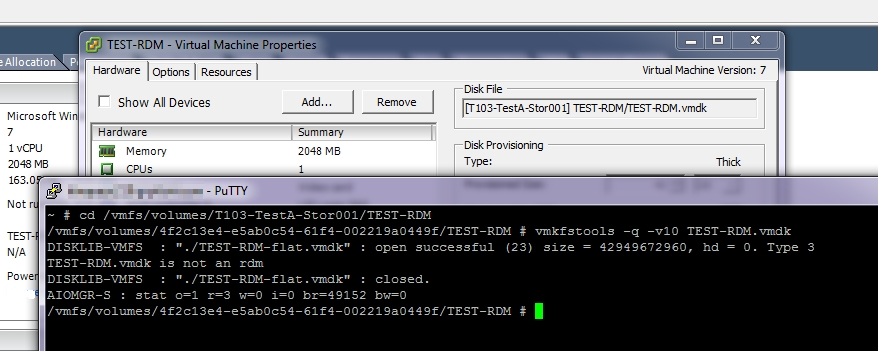

In this example screenshot, we have a VM with no snapshot in place, and we run `vmkfstools -q -v10` against the VMDK file.

`-q` means query; `-v10` sets the verbosity level to 10.

The command opens up the disk, checks for errors, and reports back to you.
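If the same query has to be run against many disks, it can be scripted. The following is only a minimal sketch: the helper names `build_query_command` and `query_disk` are hypothetical, and it assumes a Python interpreter on a host where `vmkfstools` is on the PATH. It uses nothing beyond the `-q` and `-v10` options described above.

```python
import shutil
import subprocess


def build_query_command(vmdk_path, verbosity=10):
    """Return the argv list for a verbose vmkfstools query of one descriptor."""
    return ["vmkfstools", "-q", "-v{}".format(verbosity), vmdk_path]


def query_disk(vmdk_path):
    """Run the query and return (exit_code, combined_output).

    Returns None when vmkfstools is not on PATH (for example, off-host).
    """
    if shutil.which("vmkfstools") is None:
        return None
    result = subprocess.run(
        build_query_command(vmdk_path),
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,
        universal_newlines=True,
    )
    return result.returncode, result.stdout
```

Calling `query_disk` on a snapshot descriptor should surface the same chain-traversal output shown in the screenshots, with a non-zero exit code presumably indicating a problem worth reading the output for.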

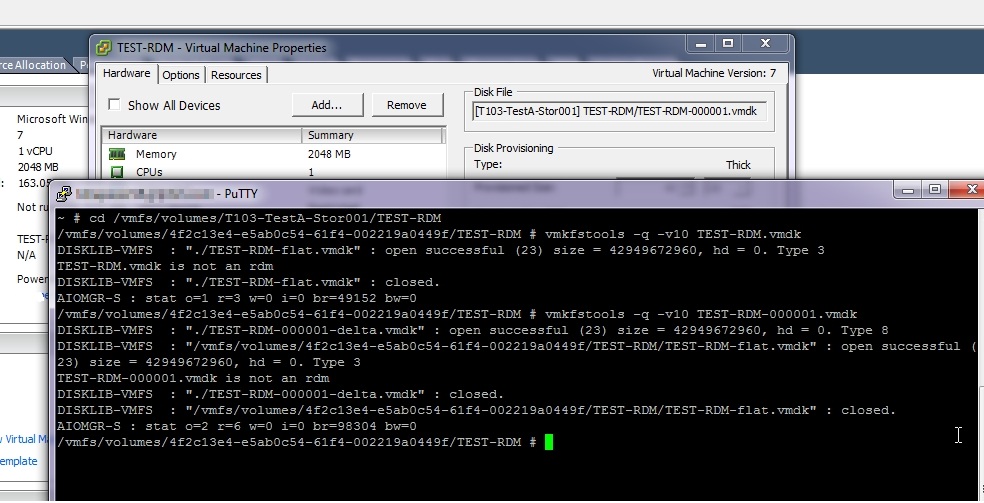

In the second example, I’ve taken a snapshot of the VM. I’m now passing the snapshot VMDK into the vmkfstools command. You can see the command opening up the snapshot file, then opening up the base disk.

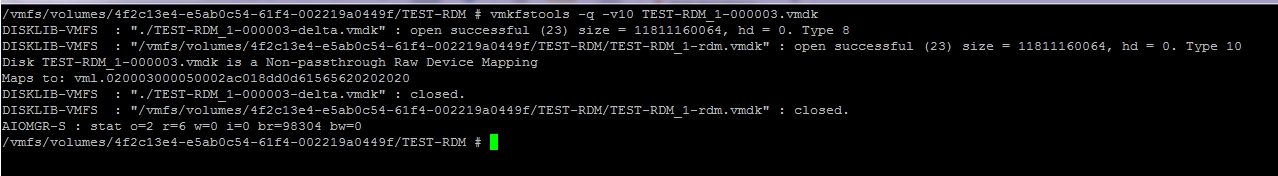

In the third example, I pass it the snapshot VMDK for a virtual-mode RDM on the same VM. It traverses the snapshot chain and also correctly reports that the VMDK is a non-passthrough raw device mapping, which means a virtual-mode RDM.

Part of our problem was that the RDM had been extended, but the snapshot still recorded the old, smaller size. However, even without any changes to the storage, a snapshot chain can become corrupted during an out-of-space situation.

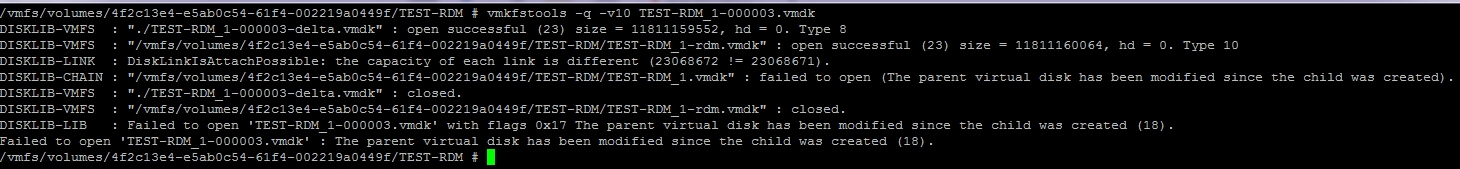

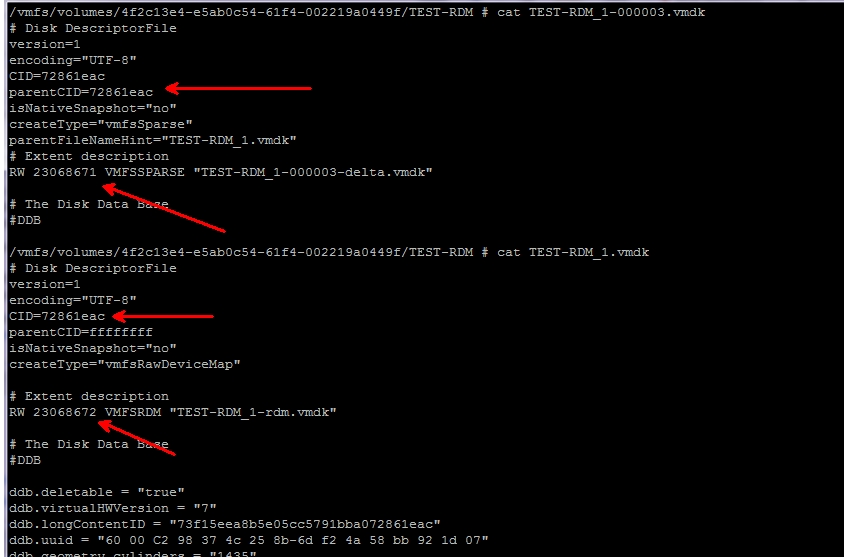

I have intentionally introduced a drive geometry mismatch in my test VM. Note that the value after RW in the snapshot descriptor TEST-RDM_1-00003.vmdk is 1 less than the value in the base disk descriptor TEST-RDM_1.vmdk.

Now if I run it through the vmkfstools command, it reports the error that we were seeing in the vSphere client in Production when trying to power on the VMs: “The parent virtual disk has been modified since the child was created”. But the debugging mode gives you an additional clue that the vSphere client does not: it says that the capacity of each link is different, and it even gives you the values (23068672 != 23068671).
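The capacity comparison that vmkfstools reports can also be reproduced by hand from the descriptor files. Here is a small sketch of that check, assuming the usual extent-line layout (access mode, size in 512-byte sectors, extent type, file name). The extent file names in the sample lines are assumed for illustration; only the two sizes come from the test VM.

```python
import re

# Matches an extent line such as: RW 23068672 VMFSRDM "TEST-RDM_1-rdm.vmdk"
# The number is the extent size in 512-byte sectors.
EXTENT_RE = re.compile(r'^(?:RW|RDONLY|NOACCESS)[ \t]+(\d+)[ \t]+\S+', re.MULTILINE)


def capacity_sectors(descriptor_text):
    """Sum the sector counts of every extent line in a descriptor."""
    return sum(int(size) for size in EXTENT_RE.findall(descriptor_text))


# Illustrative extent lines; the extent file names are assumed.
base_extent = 'RW 23068672 VMFSRDM "TEST-RDM_1-rdm.vmdk"\n'
snap_extent = 'RW 23068671 VMFSSPARSE "TEST-RDM_1-00003-delta.vmdk"\n'

base = capacity_sectors(base_extent)
snap = capacity_sectors(snap_extent)
if base != snap:
    print("capacity mismatch: {} != {} sectors".format(base, snap))
```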

The fix was to follow the entire chain of snapshots and ensure everything was consistent. Start with the most current snap in the chain. Its “parentCID” value must be equal to the “CID” value of its parent, which is the next link in the chain. The parent is named in “parentFileNameHint”. So TEST-RDM_1-00003.vmdk is looking for a parentCID value of 72861eac, and it expects to find that as the CID in the file TEST-RDM_1.vmdk.

If you open up TEST-RDM_1.vmdk, you see a CID value of 72861eac, which is correct. You also see an RW value of 23068672. Since this file is the base RDM, this is the correct value. The value in the snapshot is incorrect, so you have to go back and change it to match. Every snapshot in the chain must be checked and corrected in the same way.
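The CID walk described above can be sketched in a few lines. This is an illustration rather than a tested tool: `check_cid_chain` is a hypothetical helper, the descriptors are passed in as text keyed by file name, and the snapshot's own CID in the sample is made up because only its parentCID matters for this check.

```python
import re

# Matches lines such as CID=72861eac or parentFileNameHint="TEST-RDM_1.vmdk"
FIELD_RE = re.compile(
    r'^(CID|parentCID|parentFileNameHint)[ \t]*=[ \t]*"?([^"\n]*)"?[ \t\r]*$',
    re.MULTILINE,
)


def parse_fields(text):
    """Extract the chain-related fields from one descriptor."""
    return {key: value.strip() for key, value in FIELD_RE.findall(text)}


def check_cid_chain(descriptors, leaf_name):
    """Follow parentFileNameHint from the newest snapshot to the base disk.

    `descriptors` maps descriptor file name -> descriptor text.
    Returns a list of problems (empty if every parentCID matches its parent's CID).
    """
    problems = []
    name = leaf_name
    visited = set()
    while name not in visited:  # guard against a looping chain
        visited.add(name)
        child = parse_fields(descriptors[name])
        parent_name = child.get("parentFileNameHint")
        if not parent_name:
            break  # no parent hint: this is the base disk
        if parent_name not in descriptors:
            problems.append("{}: parent {} not found".format(name, parent_name))
            break
        parent = parse_fields(descriptors[parent_name])
        if child.get("parentCID") != parent.get("CID"):
            problems.append("{}: parentCID {} != CID {} in {}".format(
                name, child.get("parentCID"), parent.get("CID"), parent_name))
        name = parent_name
    return problems


descriptors = {
    "TEST-RDM_1-00003.vmdk": (
        "CID=0a1b2c3d\n"  # hypothetical; the snapshot's own CID is not given above
        "parentCID=72861eac\n"
        'parentFileNameHint="TEST-RDM_1.vmdk"\n'
    ),
    "TEST-RDM_1.vmdk": (
        "CID=72861eac\n"
        "parentCID=ffffffff\n"  # ffffffff marks a disk with no parent
    ),
}
problems = check_cid_chain(descriptors, "TEST-RDM_1-00003.vmdk")
```

With the values from the test VM the CID links are consistent, so `problems` is empty; the remaining fault is the RW size, which this sketch deliberately does not look at.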

Once I change the RW value in the snapshot back to 23068672, my vmkfstools command succeeds, and I’m also able to delete the snapshot from the vSphere client.
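I made the change in a text editor, but the same edit could be expressed in code. The sketch below works on the descriptor text in memory only; `with_extent_sectors` is a hypothetical helper, and the extent file name in the sample line is assumed.

```python
import re

# Captures the "RW" prefix and its whitespace so only the number is replaced.
RW_SIZE_RE = re.compile(r'^(RW[ \t]+)\d+', re.MULTILINE)


def with_extent_sectors(descriptor_text, sectors):
    """Return descriptor text with its single RW extent size set to `sectors`."""
    new_text, count = RW_SIZE_RE.subn(
        lambda m: m.group(1) + str(sectors), descriptor_text
    )
    if count != 1:
        raise ValueError(
            "expected exactly one RW extent line, found {}".format(count)
        )
    return new_text


# Illustrative snapshot extent line; the file name is assumed.
snapshot = 'RW 23068671 VMFSSPARSE "TEST-RDM_1-00003-delta.vmdk"\n'
fixed = with_extent_sectors(snapshot, 23068672)
```

Writing the result back to disk is left out on purpose. Keeping a copy of the original descriptor before changing anything seems prudent.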
